With the rise of Character AI, many similar AI chatbot services have emerged in the market.

Most of these chatbots can only use NLP technology to reply to text messages, simulating text messaging scenarios.

However, with the rapid development of AI multimodal technology, many AI chat services can now handle text, image, and voice inputs and outputs.

This means that some chatbots can not only reply to your text messages but also send you images and even voice messages, greatly enhancing the immersive experience of chatting.

But people always wonder if one day we can achieve AI video chat, just like FaceTiming with a real person.

This article introduces the two approaches to AI video chat that I know of, and the apps that offer this feature.

How is AI Video Chat Possible?

The so-called “Video Chat” doesn’t actually mean that the other side records an actual video and sends it to you.

Instead, it uses AI to generate real-time video.

This is similar to the mechanism of AI image generation, but it requires the AI model to:

1. Generate continuous frames of the character, ensuring a high degree of similarity with the character’s appearance.

2. Include the character’s voice in the video, maintaining consistent tone and responding to your previous inputs.

How does AI Video Chat work?

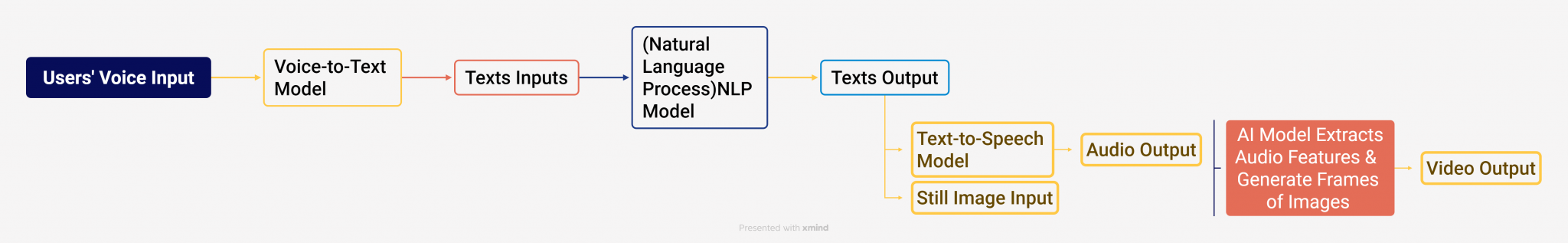

A video chat with an AI takes text, voice, and images as input; the AI models process this data to generate the corresponding audio and video frames.

In AI Video Chat, the AI works through the following steps:

1. The AI model receives the user’s voice input.

2. The model converts the voice input into text.

3. An NLP model generates the corresponding text output.

4. A TTS model converts the text output into voice output (some models support speech-to-speech, so text conversion isn’t always necessary).

5. The model extracts features from the voice output and static images to generate frames of the same duration that match the character’s appearance and the voice content. The lip movements, facial expressions, and head movements in the frames align with the voice content.

6. These frames are combined with the audio to produce a video, enabling video chat with the AI.
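The steps above can be sketched as a single function that chains the stages together. This is only an illustrative outline: every function name here is a hypothetical placeholder, the "models" are trivial stand-ins rather than real speech or video models, and the 25 fps frame rate and 0.4 seconds per word are assumptions chosen to keep the arithmetic simple.

```python
from dataclasses import dataclass
from typing import List

FPS = 25  # assumed output frame rate


@dataclass
class VideoReply:
    text: str
    audio_seconds: float
    frames: List[str]


def speech_to_text(audio: bytes) -> str:
    """Stand-in for a speech recognition model (step 2)."""
    return audio.decode("utf-8")  # pretend the audio bytes are the transcript


def generate_reply(user_text: str) -> str:
    """Stand-in for the language model (step 3)."""
    return f"You said: {user_text}"


def text_to_speech(text: str) -> float:
    """Stand-in for TTS (step 4); returns only the clip duration in seconds."""
    return 0.4 * len(text.split())  # assumption: about 0.4 s per word


def generate_frames(portrait: str, audio_seconds: float) -> List[str]:
    """Stand-in for the frame generator (step 5): one frame per 1/FPS second."""
    n_frames = round(audio_seconds * FPS)
    return [f"{portrait}#frame{i}" for i in range(n_frames)]


def video_chat_turn(user_audio: bytes, portrait: str) -> VideoReply:
    """Run one turn of the conversation through all stages."""
    user_text = speech_to_text(user_audio)             # steps 1-2
    reply_text = generate_reply(user_text)             # step 3
    audio_seconds = text_to_speech(reply_text)         # step 4
    frames = generate_frames(portrait, audio_seconds)  # step 5
    # Step 6: a real system would now mux the frames with the audio track.
    return VideoReply(reply_text, audio_seconds, frames)


reply = video_chat_turn(b"hello there", "character.png")
print(reply.text, len(reply.frames))
```

The point of the sketch is the ordering and the duration constraint: the number of frames is derived from the length of the generated audio, so the video and the voice always end together.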

Here is the flow chart of the AI Video Chat Generation Process

Two Mainstream AI Video Chat Technologies

Currently, there are two ways to generate AI videos:

1. Wav2Lip + Video Template

2. AI Talking Head Model

What is Wav2Lip?

Wav2Lip is a model that can make a person’s lips in a video move in sync with any audio you provide.

How to use Wav2Lip to create AI Video Chat?

Wav2Lip only changes the lip movements to match the audio content; it does not generate facial expressions or head movements, so a video template is also needed.

A video template is a looping video, typically a few minutes long, whose facial expressions and head movements make the chat appear more natural.

You can also use an AI face-swapping tool to replace the model’s appearance in the video with another character you like.

Pros: Video templates offer great creative space for chat videos, allowing the video to show the upper body or even the whole body of the character.

Cons: Video templates can only loop for a certain period, so often the character’s expressions and movements do not match the audio content.
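To make the looping behavior concrete, here is a minimal sketch of how a template might be stretched to cover a reply of arbitrary length. The function name, the default frame rate, and the simple wrap-around strategy are all assumptions for illustration, not a description of how any particular app does it.

```python
import math
from typing import List


def template_frame_indices(audio_seconds: float,
                           template_frames: int,
                           fps: int = 25) -> List[int]:
    """Return which template frame to show for each output frame.

    The template simply wraps around when the audio is longer than the
    template, which is why expressions can drift out of step with the speech.
    """
    if template_frames <= 0:
        raise ValueError("template must contain at least one frame")
    needed = math.ceil(audio_seconds * fps)  # frames required to cover the audio
    return [i % template_frames for i in range(needed)]


# A 2-second reply at 5 fps over a 4-frame template (tiny numbers for clarity).
indices = template_frame_indices(2.0, template_frames=4, fps=5)
print(indices)  # [0, 1, 2, 3, 0, 1, 2, 3, 0, 1]
```

The lip-sync model would then redraw only the mouth region on each selected frame, so the head pose and expression at any moment depend on where the loop happens to be, not on what is being said.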

What is the AI Talking Head?

It’s a technology that makes a digital face talk and move like a real person. The “talking head” part refers to showing mainly the head and shoulders of a person speaking directly to the camera.

How does Talking Head Work?

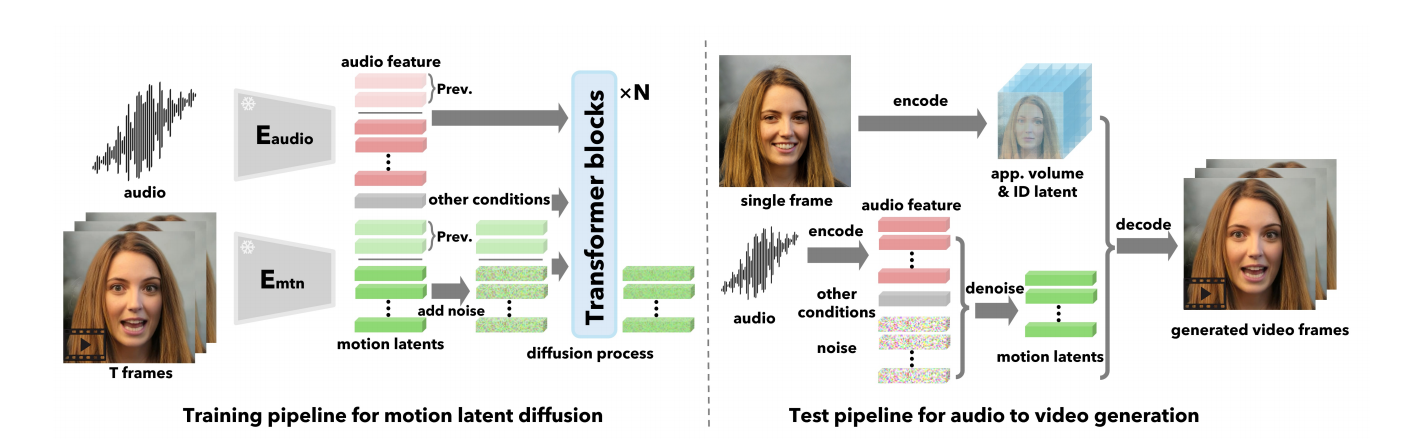

Currently, there are two main technologies for Talking Head. One method uses video to drive static images. The AI model learns the movements, facial expressions, and lip movements from the video and generates the corresponding video based on the character’s static image.

The challenge with this technology is that creating the driving video is not easy. It is even more difficult than creating a video template.

The other method, as mentioned above, uses audio to drive static images. The audio can be generated in real-time by an AI model, enabling real-time video chat functionality.
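One practical detail of the audio-driven method is that each video frame has to be paired with the slice of audio it covers. The following sketch shows that alignment only; the function name and the default values (16 kHz audio, 25 fps video) are assumptions, and a real model would typically work on audio features rather than raw samples.

```python
from typing import List, Sequence


def audio_chunks_per_frame(samples: Sequence[float],
                           sample_rate: int = 16000,
                           fps: int = 25) -> List[Sequence[float]]:
    """Split an audio signal into one chunk of samples per video frame."""
    samples_per_frame = sample_rate // fps  # 640 with the defaults
    n_frames = len(samples) // samples_per_frame  # drop any trailing partial frame
    return [
        samples[i * samples_per_frame:(i + 1) * samples_per_frame]
        for i in range(n_frames)
    ]


# One second of silence yields one chunk per frame at the assumed rates.
chunks = audio_chunks_per_frame([0.0] * 16000)
print(len(chunks), len(chunks[0]))  # 25 640
```

Because the chunks arrive as the audio is generated, frames can be produced incrementally, which is what makes the real-time use case plausible.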

Pros: Since the entire character’s lip movements, facial expressions, and head movements are generated by AI, the overall appearance is more harmonious, unified, and natural.

Cons: Currently, Talking Head technology can only focus on the character’s head and cannot generate hand or other body movements.

AI Video Chat Apps Currently on the Market

SoulFun

SoulFun is currently one of the few products that truly enables AI Video Chat.

Unlike other products that only animate the character’s head, it uses both Wav2Lip + Templates and AI Talking Head technologies. This allows you to have lively AI video chats with all the characters within the app.

Additionally, it supports user-uploaded images, enabling direct video chats with characters from those images.

TalkFlow

TalkFlow is another app that, like SoulFun, supports real-time AI video chat. Currently, it is available only on iOS and Android, with no web version.

In addition to text chatting, it supports real-time video calls.

Once you connect, the characters use AI talking head technology to animate, providing facial expressions and feedback, making it feel like you’re chatting with a real person.

Moreover, TalkFlow allows you to upload images and transform the characters in static pictures into videos.

Final Verdict

As the technology continues to develop, I believe people will eventually be unable to tell whether the person on the other side of the video is a real human or a virtual digital person. This type of application is bound to become increasingly popular.
